If you are considering embedded or white-labelled analytics solutions, this post explains why you should first understand the analytic capability of your software before taking action.

As a product manager, you will need to determine what constitutes a Minimum Viable Product (MVP) before launching it. It is critical to first examine the existing software’s analytical capability and address areas for improvement.

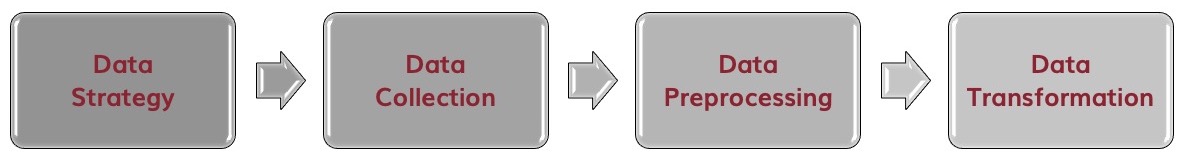

The ‘Embedded Analytics Maturity Curve’ is a useful strategic assessment framework that product managers and software owners can use to help plan out their implementation. It outlines defined phases of the overall product journey, and can be used as a roadmap to formulate analytical development, adoption and long-term strategy.

This visual framework focusses on the usability of business intelligence analytics, and maps out the development effort required to reach the desired target.

The curve indicates the ideal trajectory of an analytics solution. As the product matures, its analytical capability and data value increase, and it ultimately becomes a more sophisticated piece of software.

By following this structured progress model, you can better determine and learn:

- The evolution of your analytics product

- Where improvements are required to move it to the next stage of maturity

- How the final product will deliver value through automated in-context workflows, reducing effort for both the developer and end user.

ANALYTICS MATURITY STAGE 1 – No Capability

If you are at the beginning of building a Minimum Viable Product (MVP) and getting it ready for market, then you are at stage 1 of the analytics maturity curve. At this stage your software is likely to be purely transactional, without methods to analyse data such as dashboards and reports.

You may have decided to ship your product, perhaps as a proof of concept, with the intention of including analytic functionality in the future. However, what’s important to consider here is the user’s requirements, because these analytic constraints may pose severe problems when trying to introduce sophisticated features later on.

Key reasons to evolve:

- clients are demanding more access to their data

- you’re losing to competitors with reporting and data access API capabilities

- lack of access to data and insights is the reason for lost deals

Signs that you’re ready for the next stage:

- you have a good grasp of your clients’ information needs

- you have the required data platform expertise within your organisation

- your data structure is stable

ANALYTICS MATURITY STAGE 2 – Data Exports

At this stage you are providing data export tools such as CSV downloads or API access. This is to cater for clients that now recognise the need for report building and data consumption to guide their decision-making.

If your users can only access their data using an external solution then this presents limitations. It means they need to build their analysis from scratch and manage the data pipeline outside of your software. The disparate nature of the analytic experience becomes burdensome and time-consuming. Plus, the data is in its raw format, which may not give an accurate picture or support meaningful insights.

Exporting a CSV from your software and uploading it to a third-party BI tool for analysis requires a user to keep switching back and forth between the two for data context. This creates a disjointed experience overall, and without guidance on how and where to start, users could easily become frustrated.
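To make that burden concrete, here is a minimal Python sketch of a Stage 2 export and the work it leaves to the client. The `ORDERS` data, field names and both functions are hypothetical and purely illustrative; they are not taken from any particular product.

```python
import csv
import io
from collections import defaultdict

# Hypothetical transactional records, as an application might store them.
ORDERS = [
    {"order_id": 1, "region": "North", "amount": 120.0},
    {"order_id": 2, "region": "South", "amount": 80.0},
    {"order_id": 3, "region": "North", "amount": 50.0},
]


def export_orders_csv(rows):
    """Stage 2 export: serialise raw rows to CSV text, with no aggregation."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["order_id", "region", "amount"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def total_by_region(csv_text):
    """What the client has to rebuild outside the product for one insight."""
    totals = defaultdict(float)
    for row in csv.DictReader(io.StringIO(csv_text)):
        # CSV values come back as strings, so types must be restored by hand.
        totals[row["region"]] += float(row["amount"])
    return dict(totals)


csv_text = export_orders_csv(ORDERS)
totals = total_by_region(csv_text)
```

The export itself is trivial to provide, but every client has to write and maintain something like `total_by_region` on their side, which is the gap the later stages aim to close.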

Key reasons to evolve:

- clients are integrating data into their own reporting solutions but struggling to build meaningful reports

- you want to charge for data access but data exports provide little value to justify this

Signs that you’re ready for the next stage:

- you have access to resources who understand your data model and can define and build basic reporting

- you have a clear set of basic reporting requirements from your user base that is common across many clients

- you have an underlying data structure that can accommodate reporting workloads without impacting performance

ANALYTICS MATURITY STAGE 3 – Basic Reporting

This stage is typically marked by the introduction of an in-house developed analytical solution or basic operational reporting capabilities, where users can build basic parameter-driven reports within an application. However, the set of dashboard and report options is usually limited, and users cannot create their own custom analysis.
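As a rough illustration of what "parameter-driven" means here, the following Python sketch uses the standard-library `sqlite3` module with an in-memory database. The `orders` table, its columns and the `sales_report` function are assumptions made for the example, not part of any real product.

```python
import sqlite3


def build_demo_db():
    """Create an in-memory database with a hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (region TEXT, order_date TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [
            ("North", "2024-01-05", 120.0),
            ("North", "2024-02-10", 50.0),
            ("South", "2024-01-20", 80.0),
        ],
    )
    return conn


def sales_report(conn, start_date, end_date):
    """Fixed report: users choose the date range, not the query or layout."""
    query = """
        SELECT region, SUM(amount) AS total
        FROM orders
        WHERE order_date BETWEEN ? AND ?
        GROUP BY region
        ORDER BY region
    """
    # Bound parameters keep user input out of the SQL text itself.
    return conn.execute(query, (start_date, end_date)).fetchall()


conn = build_demo_db()
january = sales_report(conn, "2024-01-01", "2024-01-31")
```

The user controls only the parameters. Any new grouping, measure or chart means another function like `sales_report`, which is why report requests tend to land back on the development team at this stage.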

Users need to make quick decisions based on reliable, immediately available insights, and this creates a new challenge. As requests for new reports grow, developers can struggle to keep up with demand, which can slow down development of the core product.

Key reasons to evolve:

- your clients are requesting more sophisticated insights

- your clients want to give access to senior management and tabular reports don’t cut it

- competitors are innovating with data and have a more targeted sales and marketing approach

Signs that you’re ready for the next stage:

- you are able to define and measure KPIs in your data that are common across clients

- you can define views of your data that can be combined into executive or operational dashboards

- you understand what your competitors are offering and how you can match their offering or create a new unique selling point

ANALYTICS MATURITY STAGE 4 – Standalone Dashboard and Reporting Module

And now for the embedding of real-time reports, dashboards and data visualisations into your software!

This is where you can offer a true self-service reporting experience that enables users to create their own bespoke analytic content, using pre-defined, secure data sets.

Clients will have better access to data via standalone modules (dashboards/reports) with the ability to create bespoke reports, which frees up the developer’s time. Business Intelligence analytics become more feature-rich and user-friendly, providing higher value for users and reduced workload for your development team.

The challenge at this stage is to ensure users make optimal use of your embedded software. You need to ensure that they remain focussed within your application, without having to switch to external sources for context. The easier it is for them to discover insights, the less likely they will be distracted from their workflows.

Key reasons to evolve:

- having a competitive edge in your analytics offering is essential to your strategy

- you may have lost customers who were looking for greater analytics sophistication to competitors

- you see key advantages for your users in enabling analytics at the point of consumption

Signs that you’re ready for the next stage:

- your data model is highly mature and performant

- you have mature data and analytics capability or partners who provide that skillset

- you have UX expertise that can help design and combine analytics into your core application workflows

ANALYTICS MATURITY STAGE 5 – Contextual Analytics

You’ve made it – embedded analytics takes a giant leap into contextual analytics!

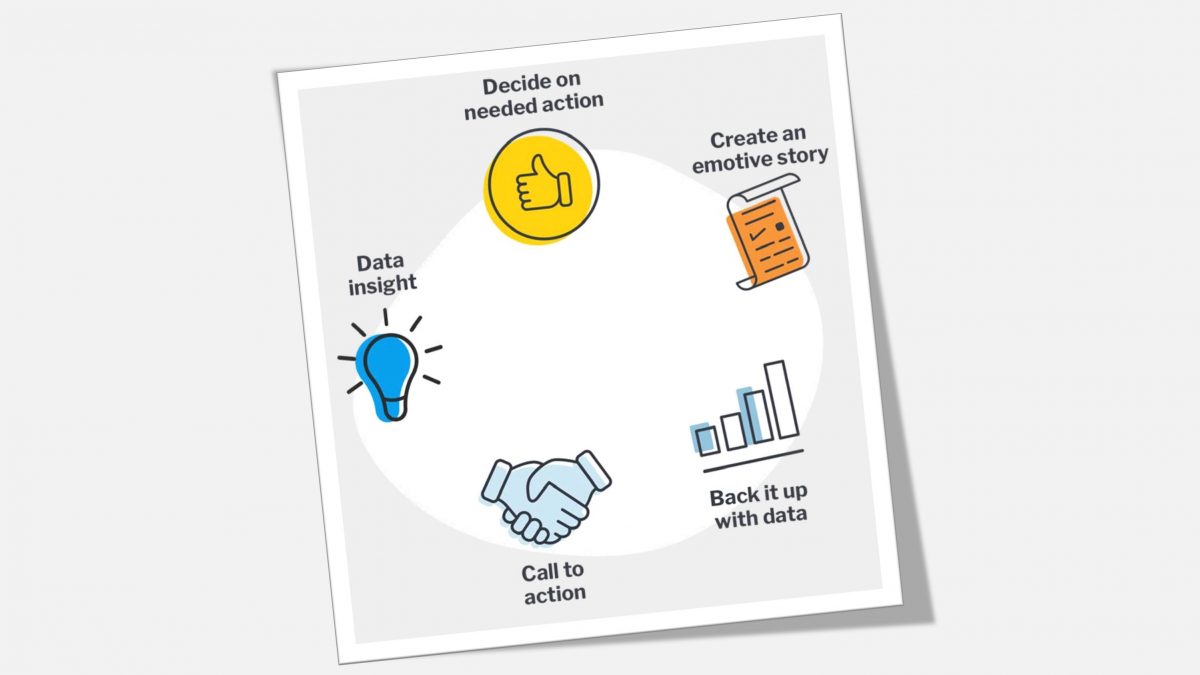

Contextual analytics integrates components such as charts, tables, dashboards, alerts and visualisations, and delivers them directly in the user’s interface and core transaction workflow.

Users have access to relevant data and insights in real-time, right at the point when they need to take timely action. They may not even realise they’re using analytics because much of the data will be pre-defined, automated and seamless.

Contextual analytics is really the best way to fully optimise the use of your core software and future-proof it. Providing a high-quality experience to users significantly increases the business benefits for everyone.

Improving your chances for maturity

Progressing through the five stages of the embedded analytics maturity curve is a journey, not a race, and can be achieved by every team regardless of their data skills.

An honest assessment of your current analytics capabilities and areas for improvement is the critical first step. Be clear about how well your software meets the curve criteria, what value it offers business users and where it may fall short.

It may be useful to look at lessons learned from other mature organisations in similar industries. Their successful use cases can inspire your own product initiatives.

Achieving an exceptional analytics offering relies on aligning your product’s data maturity with its embedded maturity. You can’t get to Stage 5 insights while your data is at Stage 2. Start to prepare your data ahead of migration. Don’t limit yourself to just one stage; look further still, right to your end goal.

Get ready for embedded and contextual analytics

Ultimately, taking the time to examine the state of your data, people and technologies in depth can provide valuable guidance in maturing your software’s analytical capabilities, and even be a much-needed wake-up call.

With the availability of modern solutions like Yellowfin that make the adoption and implementation of embedded and contextual analytics as seamless and streamlined as possible, there is no better time to begin assessing your product’s current analytical maturity.

Talk to us to find out how you can start planning the introduction of new and innovative features that will transform the way your users engage with data and help them make better-informed decisions sooner.